Fig.1 The experimental vehicle. The cameras on the left and right sides are aligned with the wheels, so they record when each wheel is on the solid line and in what condition, which lets us verify whether the vibration data has been segmented correctly. In addition, a 32-beam LiDAR is mounted on the front of the vehicle to perceive the surroundings.

Acquisition devices. The data acquisition equipment configuration is shown in Fig.1. The experimental vehicle is a BaiQi Lite, the cameras are Basler acA1920-40 units, and the vibration signal is acquired with a Siemens PCB 3-way ICP acceleration sensor, shown in Fig.2. The cameras are installed as follows: the front-view camera is mounted on the front hood, and the left and right cameras are mounted on the left and right rear-view mirrors, respectively.

Fig.2 Siemens PCB 3-way ICP acceleration sensor

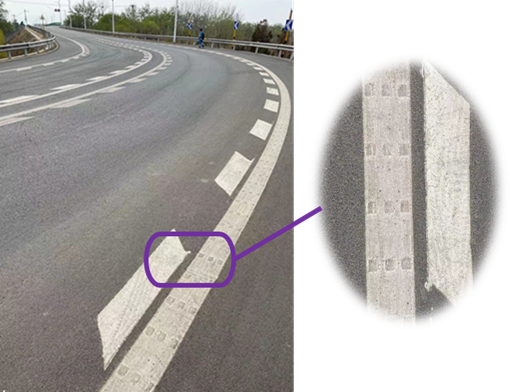

Fig.3. A convex vibration marking line

Production process. From May 2021 to July 2021, we recorded lane line video between 17:00 and 19:00 using the cameras (20 Hz, 1920 x 1080) and the vibration signal acquisition sensor (128 Hz). We collected camera and vibration data in Beijing, China, in scenes such as turning intersections and speed bumps. The lane lines we selected for data collection were raised (convex) vibration marking lines, as shown in Fig.3.

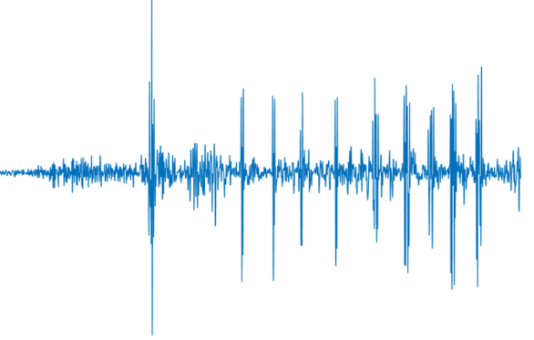

Vibration signal dataset. Existing datasets such as KITTI and A2D2 do not provide vibration data, so we collected vibration and visual data synchronously. The vibration signal is visualized in Fig.4.
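Because the cameras run at 20 Hz and the vibration sensor at 128 Hz, each video frame spans 6.4 vibration samples on average. The following is a minimal sketch of how a frame index could be mapped to its vibration samples. It assumes, which the dataset description does not state, that both streams start at the same instant and run at exactly their nominal rates. The function name `vibration_window` is hypothetical and not part of any released tooling.

```python
def vibration_window(frame_idx, camera_hz=20, vibration_hz=128):
    """Return the half-open range [start, end) of vibration sample indices
    recorded during camera frame `frame_idx`.

    Assumption (not confirmed by the dataset description): both streams
    start at the same instant and run at exactly their nominal rates.
    """
    if frame_idx < 0:
        raise ValueError("frame_idx must be non-negative")
    # Integer arithmetic avoids floating-point drift
    # (128 / 20 = 6.4 samples per frame on average).
    start = frame_idx * vibration_hz // camera_hz
    end = (frame_idx + 1) * vibration_hz // camera_hz
    return start, end


# One second of video (20 frames) covers one second of vibration data.
windows = [vibration_window(i) for i in range(20)]
```

With these rates, consecutive windows are contiguous and each holds 6 or 7 samples. If the released files carry per-sample timestamps, matching on those would be more reliable than this rate-based mapping.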

Fig.4. Vibration data visualization

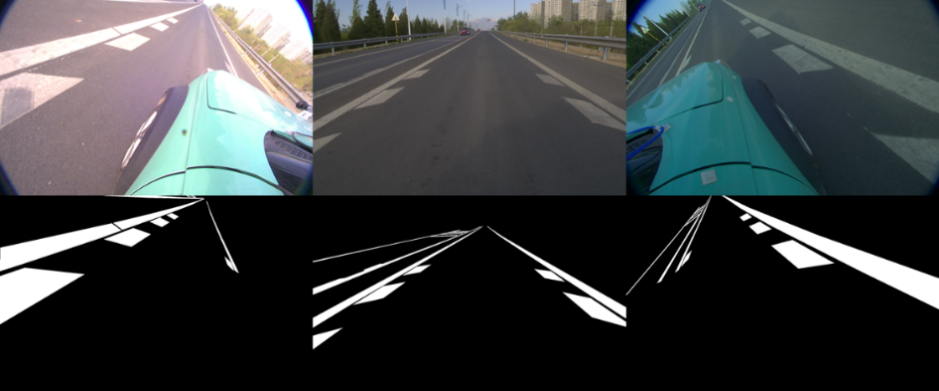

Fig.5. Images dataset visualization

Images dataset. Most current lane detection datasets focus on the front-view camera's record of the lane line ahead, and little attention is paid to lane line information from a lateral perspective. Yet it is this lateral view that clearly records whether the wheel is on the solid line. We have therefore released a new lane detection dataset built from lateral camera recordings. The dataset covers a variety of scenes, such as straight lines (top left), dashed lines (top middle), curves (top right), occlusion (bottom left), daytime (bottom middle), and dusk (bottom right). We split the entire video into frames, with a total size of 8 GB, divided the frames into two categories (on the lane line and not on the lane line), and then cleaned and sampled the data. To facilitate work that uses continuous information, we distribute the dataset in 158 folders, each containing at least 4 continuous video frames. The images dataset is visualized in Fig.5.
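Since every folder holds at least 4 continuous frames, temporal models can be fed short clips cut from each folder. The following is a minimal sketch of one way to do that with a sliding window. The helper names `list_frames` and `sliding_clips` are hypothetical, and the sketch assumes that file names within a folder sort in capture order, which the dataset description does not confirm.

```python
from pathlib import Path


def list_frames(folder):
    """Return the files in `folder`, sorted by name.

    Assumption: file names sort in capture order. Check this against
    the actual dataset layout before relying on it.
    """
    return sorted(p for p in Path(folder).iterdir() if p.is_file())


def sliding_clips(frames, clip_len=4):
    """Return every run of `clip_len` consecutive frames as a tuple.

    A folder with fewer than `clip_len` frames yields no clips.
    """
    if clip_len < 1:
        raise ValueError("clip_len must be at least 1")
    return [
        tuple(frames[i:i + clip_len])
        for i in range(len(frames) - clip_len + 1)
    ]
```

For a folder path `folder`, `sliding_clips(list_frames(folder))` would give overlapping 4-frame clips. The default `clip_len=4` matches the stated minimum folder size, so every folder contributes at least one clip.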

The VBLane dataset is published under the CC BY-NC-SA 4.0 license. It can be downloaded free of charge for academic research.

We thank Datatang (https://www.datatang.ai, stock code: 831428), a leading artificial intelligence data service provider, for providing tailored data services. Several of its annotation platforms have cut data processing costs by integrating automatic annotation tools. With its high-quality training data services, Datatang has helped thousands of AI enterprises around the world improve the performance of their AI models.