1. What does V2X-Radar provide?

V2X-Radar is the first large-scale, real-world, multi-modal dataset featuring 4D Radar designed for cooperative perception. It features:

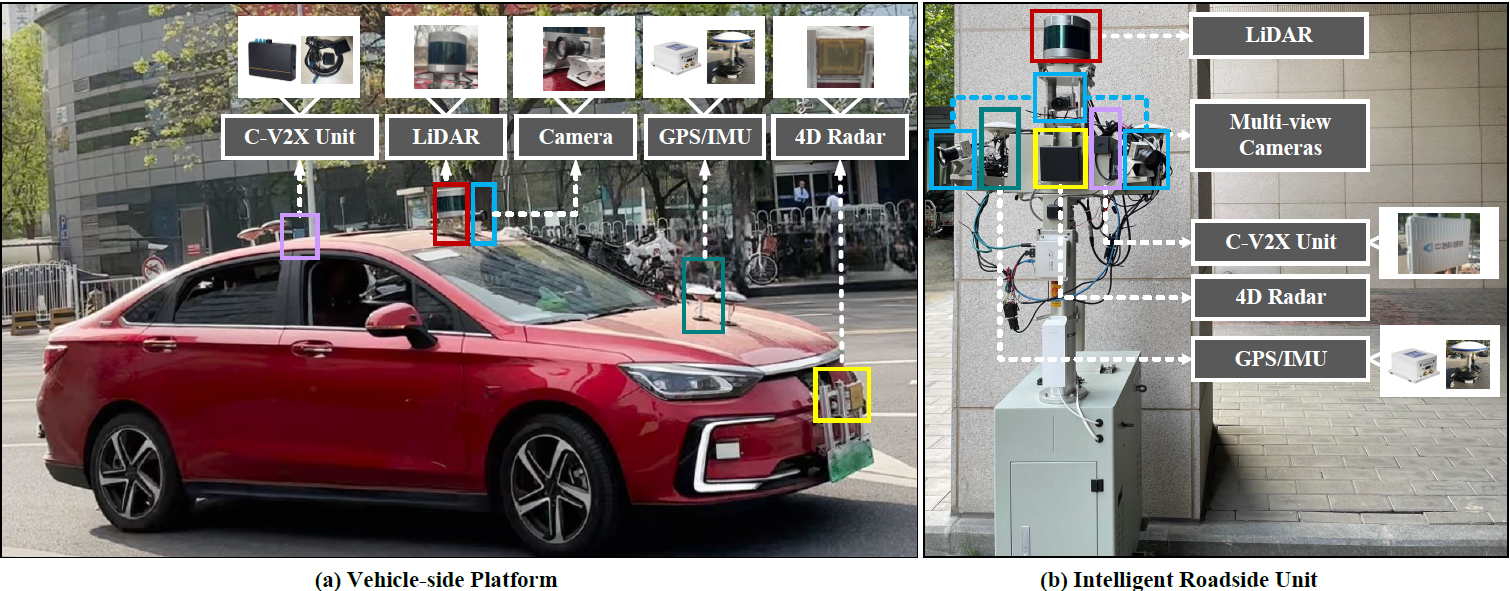

More modalities: The V2X-Radar dataset includes data from three types of sensors (LiDAR, Camera, and 4D Radar), enabling further research on 4D Radar-related cooperative perception.

Diverse scenarios: The collected data covers diverse weather conditions (sunny, rain, fog, snow) and times of day (daytime, dusk, nighttime), with a focus on complex intersections that are challenging for single-vehicle autonomous driving. These scenarios contain occluded blind spots that affect vehicle safety and provide varied corner cases for cooperative perception research.

Multi-task support: The dataset is further divided into three data subsets, with V2X-Radar-C supporting collaborative perception tasks, V2X-Radar-I supporting roadside perception tasks, and V2X-Radar-V supporting vehicle-end perception tasks.
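As a minimal sketch of how the three subsets relate to their tasks, the mapping can be written down as a lookup table. The subset names and task descriptions come from the paragraph above; the dictionary `SUBSET_TASKS` and the helper `subset_for_task` are hypothetical names used only for illustration and are not part of any released V2X-Radar tooling.

```python
# Subset names and task descriptions are taken from the dataset description.
# SUBSET_TASKS and subset_for_task are hypothetical helpers for illustration,
# not an API provided by the V2X-Radar repository.
SUBSET_TASKS = {
    "V2X-Radar-C": "collaborative perception",
    "V2X-Radar-I": "roadside perception",
    "V2X-Radar-V": "vehicle-end perception",
}


def subset_for_task(task: str) -> str:
    """Return the subset name whose described task matches `task`."""
    wanted = task.strip().lower()
    for subset, described_task in SUBSET_TASKS.items():
        if described_task == wanted:
            return subset
    raise ValueError(f"no V2X-Radar subset is described for task: {task!r}")


if __name__ == "__main__":
    for name, described_task in SUBSET_TASKS.items():
        print(f"{name}: {described_task}")
```

A lookup like this may be handy when one training script is meant to switch between the cooperative, roadside, and vehicle-side settings.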

We carefully curated a diverse dataset from 540K driving frames, selecting 20K LiDAR scans, 40K camera images, 20K 4D Radar samples, and annotating 350K 3D bounding boxes across 5 vehicle categories.

2. Hardware Platform

3. Paper + GitHub

Paper: https://arxiv.org/pdf/2411.10962

GitHub: https://github.com/yanglei18/V2X-Radar

4. Download

Sample-mini: https://drive.google.com/drive/folders/11zq-v9GBdFv_tnpKd3EkS1mbSNzZf0RT?usp=sharing

Trainval-full: To be released soon